When a Picture Is Worth a Thousand AI-Generated Words

A story about artistry, generative AI, and the future of human creativity.

Let me tell you a little story — something that happened to me recently and got me thinking about the subtle but very real risks of relying too much on generative AI.

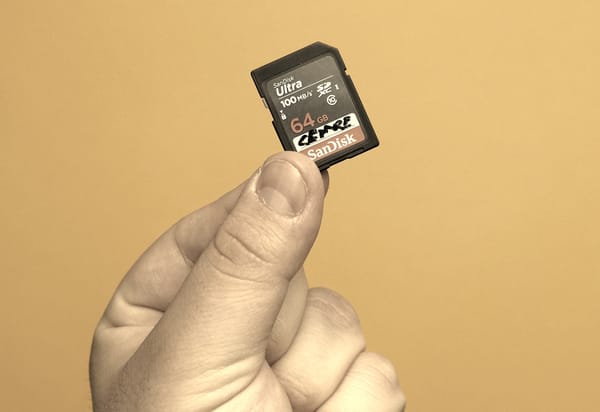

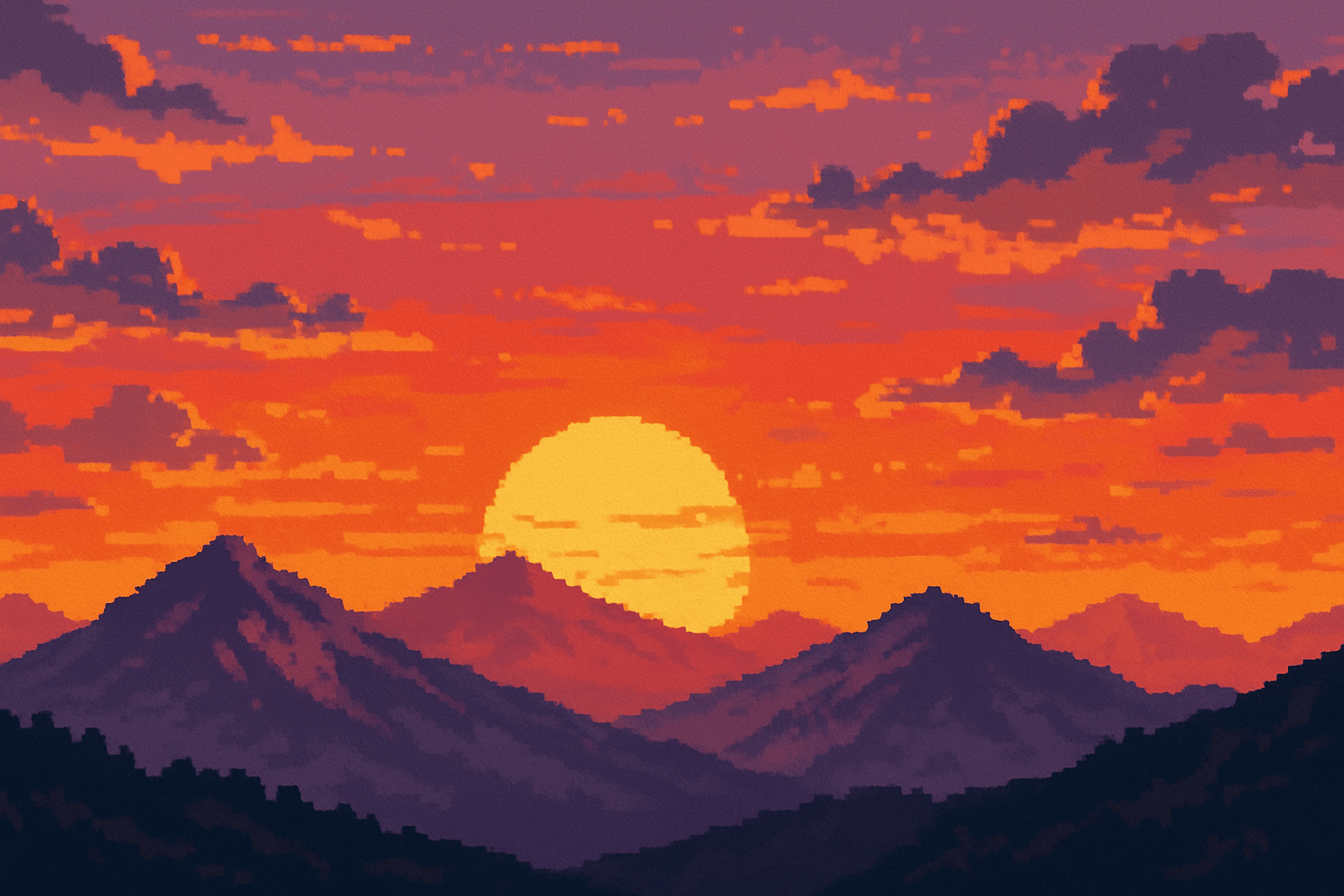

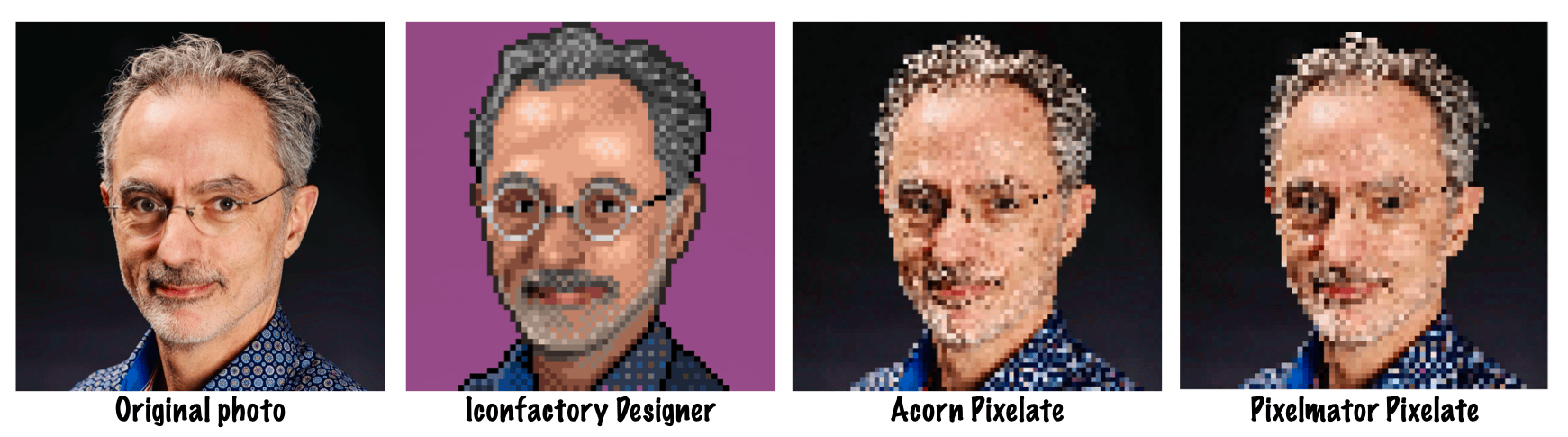

Not long ago, I commissioned a digital artist to create a pixel art version of a professional photo of myself. I’ve always loved this art style. In a world full of ultra-HD displays and insanely powerful processors, pixel art feels like a charming rebellion. It reminds me of where it all started — my early days with computers, video games, and the magic of digital discovery. (If you’re curious about the history of pixel art, there’s a good primer on Wikipedia.)

So I reached out to Gedeon Maheux, a designer at Iconfactory. He has worked on numerous projects for various apps, games, and brands. He’s not just a designer — he’s a real artist. I paid $120, and sure enough, two weeks later, the finished portrait arrived by email.

I love the result. It looks like me, but through a stylized, charming lens — part retro, part comic book. So I showed it to my 21-year-old son, expecting some enthusiasm. Instead, I got a reality check:

Me: “Look! I had this made by an artist — a pixel art version of me. Pretty cool, right?”

Him: “Wait, seriously? You paid for that?”

Me: “Yeah, $120.”

Him: “You could have done that in Photoshop in like five minutes…”

Huh.😳

To test this, I opened Pixelmator and attempted to reproduce the same effect. Here’s the result:

And yeah, it took about five minutes. But it wasn’t great. The shape of my face is there, but the details? Gone. My eyes? Basically blobs. There’s no expression, no subtlety. Just pixels, arranged by an algorithm with no understanding of what it’s looking at.
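For the curious, here is a minimal sketch of the kind of naive pixelation I have in mind. I don't know what Pixelmator actually does under the hood, so treat this as an assumption: the image is cut into square tiles and each tile is replaced by its average value. The `pixelate` function and the tiny grayscale `face` grid are made up for illustration.

```python
def pixelate(image, block):
    """Replace each block x block tile of a grayscale image with its average.

    Hypothetical helper for illustration; not Pixelmator's actual filter.
    """
    height, width = len(image), len(image[0])
    result = [row[:] for row in image]
    for top in range(0, height, block):
        for left in range(0, width, block):
            # Rows and columns covered by this tile (edge tiles may be smaller).
            rows = range(top, min(top + block, height))
            cols = range(left, min(left + block, width))
            tile = [image[y][x] for y in rows for x in cols]
            average = sum(tile) // len(tile)
            # Every pixel in the tile gets the same value: the detail is gone.
            for y in rows:
                for x in cols:
                    result[y][x] = average
    return result


# A 4x4 "face" with a single bright pixel standing in for an eye.
face = [
    [10, 10, 10, 10],
    [10, 250, 10, 10],
    [10, 10, 10, 10],
    [10, 10, 10, 10],
]

coarse = pixelate(face, 2)
for row in coarse:
    print(row)
```

With 2x2 tiles, the bright "eye" pixel is smeared into a dull 70 across its whole tile. The algorithm has no idea that this one pixel mattered more than its neighbors.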

That’s when it hit me: this is precisely what happens with AI-generated content, especially when it comes to writing, summarizing, or mimicking something creative. You get the broad strokes. The structure might make sense. But the nuance? The personality? The intent? Often gone.

And we’re seeing more and more of this — AI-generated text, AI-generated images, AI-generated ideas — flooding the internet. Which leads me to a second thought: what happens when future AI models are trained not on original human-made content, but on AI-generated content that was itself trained on other AI-generated content?

Imagine giving the artist the pixelated version of my photo and asking him to “improve it” without ever seeing the original. You can guess how that would turn out.

That’s the real risk we’re facing with the unchecked rise of generative AI. If we keep training machines on content made by other machines, we’ll end up with generations of output that get further and further from the richness, imperfection, and depth of human creativity. We’ll lose the fine details — the equivalent of the eyes in my portrait.

This isn’t a rant against AI. I use it. I’m fascinated by it. But there’s a difference between using a tool and replacing the creator. And when the human input fades away — when creativity is reduced to algorithmic mimicry — we start losing something essential.

Food for thought.

P.S.: Iconfactory, the company where the artist I worked with is a designer, has been struggling since the rise of generative AI. Here’s a post from one of their team members about it. Worth a read.

P.S. #2: This article was first written and published in my native language, French, and was translated into English using ChatGPT.